Two weeks ago, I was working with Opus 4.5 and, for the first time since I started using Claude Code, I hit my usage limit for the model and had to wait an hour for it to reset.

Yes, Claude Code has a /usage command that tells us exactly where we stand, but if I have a set of agents or subagents working on something, I’d prefer not to interrupt them to check usage. And stopping them, or stopping what we’re doing, to open yet another session just to check usage is cumbersome.

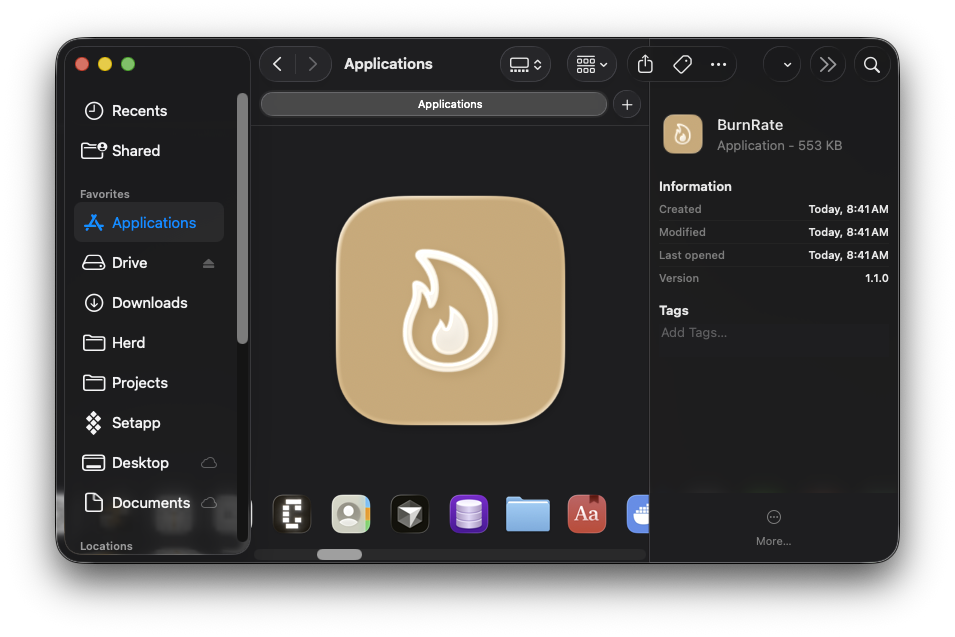

I wanted something I could glance at without breaking focus, and that’s exactly what BurnRate is.
