This is one of set of posts that I hesitated writing because I don’t know how applicable it really is because it’s going to be dealing with talking about how to achieve something at an abstract level without using any real code examples.

And it’s not that I think talking about algorithms or procedures without code is bad (we all use pseudo-code, right?), I’m just not sure if it’s one of those series of articles that’ll help someone or not.

But I can’t be the only one who’s encountered these issues, so I’ll give it a shot. And this is going to be spread out over a few posts.

One of the more common tasks that you’ll see in web application development deals with importing data usually in some file type (often times which is CSV though other file formats are becoming more popular). A challenge, though, with importing data via CSV – especially a large data set – comes with giving the user visual feedback as to how the process is going.

And when it comes to importing CSV files into WordPress, the same rules apple. Importing large data sets and relying on the API to take care of, say, creating posts, associated taxonomies, and adding media can be a time consuming process.

But there are some strategies that we can employ that will help to ensure:

- There won’t be any PHP timeouts

- The importer gives visual cues without blocking the page load

- The functionality cleans up after itself when it’s done.

Though there are a number of ways to attack this particular problem, here’s how I’ve been doing it, as of late.

Importing CSV Files into WordPress

Obviously, the times during which you’d be importing files is when you have data in a CSV format that needs to be parsed, processed, and stored in the WordPress database.

There’s a lot of things to handle during this process such as error checking, sanitization, and all of that jazz, but all of that is outside the scope of this particular series. Instead, this is more about breaking the process by which you can efficiently import data, give user feedback, and clean up the residual data when done.

One thing to note is that some imports are quicker than others – that is, perhaps the file that’s being imported is small, the data that has to be added is small, and/or perhaps there are very few API calls and/or queries that have to be made when import process is happening.

But assume you’re given the a CSV file that contains records for importing hundreds (to thousands and upwards) of rows of data each of which corresponds to a custom post type having its own set of custom terms for custom taxonomies.

There’s a lot to handle. You want to check…

- Titles (or another property) to see if the post already exists, and skip it if it does exist

- Taxonomies and terms to see if they need if they already exist or need to be created

- Ensure that you’re appending terms rather than overwriting what may exist on a post that already exists.

- …and so on.

This can be a time consuming process not just from a programmatic standpoint, but from actually importing the data. A the reason that this can be a problem is because:

- The user selects a file to upload

- They click on the ‘Upload’ button

- The process kicks off and the page blocks until the process is complete

- There may or may not be a timeout on the server

This means that the user has very little feedback as to what’s happening, and the page continues to load for minutes while the data is processing or even stops execution because the server terminates the script.

Bummer.

But there are a few things to do that I’ve already mentioned to tackle this problem in order to make sure that we’re doing what we can to mitigate any potential PHP timeouts and to display progress to the user.

To do this, though, it requires a bit of Ajax so if you’re not familiar with how to do this in WordPress, then I recommend checking out the associated Codex article. Though I won’t be talking about it in this article, it’ll be necessary in the next few.

1. Preventing PHP Timeouts

Every web server has some period of time at which they terminate the execution of a PHP script. On localhost, it’s really easy to get lazy and set an extraordinarily high number, but you don’t always have that control on servers to which you’re deploying your work.

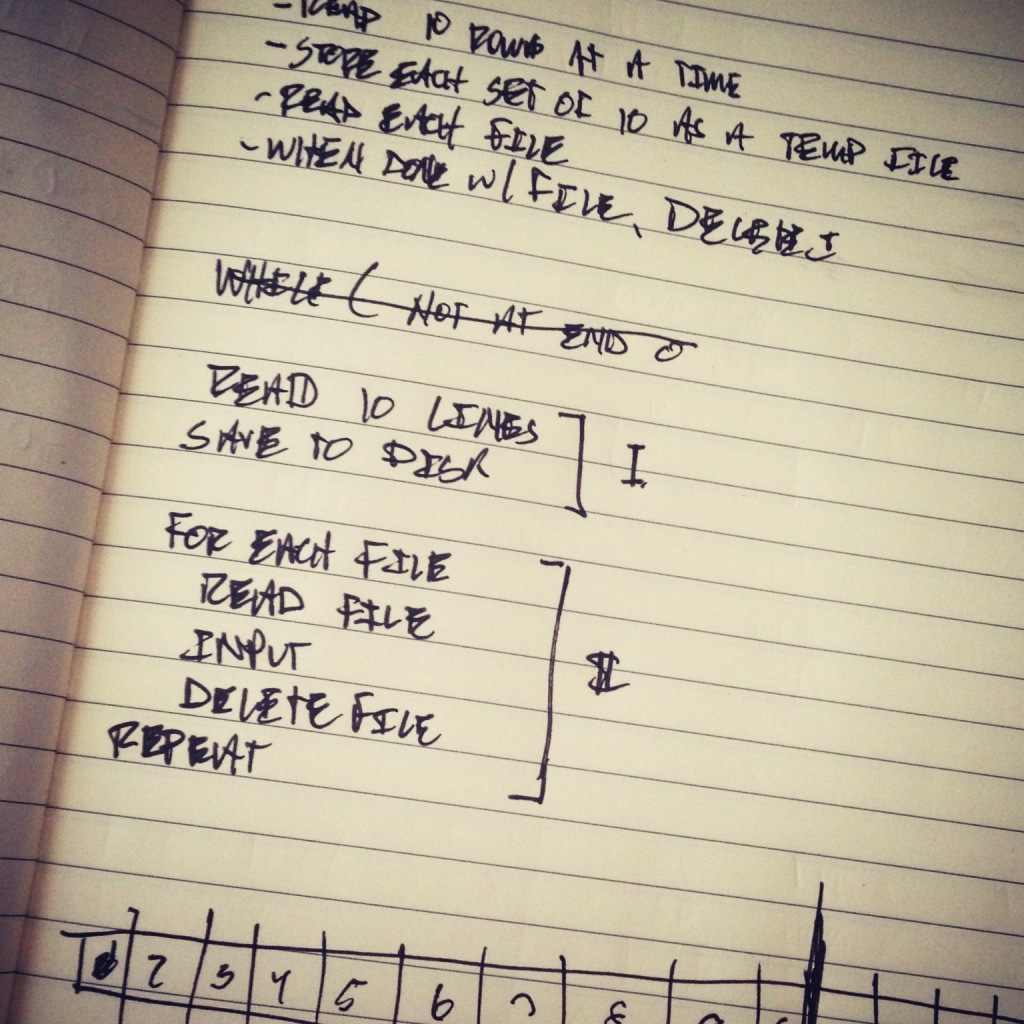

To address the issue of PHP timeouts, the first thing that I’ve found useful is the split the incoming CSV into a number of files. You can do this a number of ways, but for the sake of this article, I figured using 10 rows is easy enough.

First, we need to determine how many files we need to write:

- Read the contents of the file and `explode` each line into an index of an array.

- Count the total number of rows and store it in a variable.

- Store the title row (since it’s needed at the top of each file) in its own variable.

- Divide the total number of rows by 10 to determine how many files are needed.

- Use the modulus operator to determine how many rows will be written to the final file (since it’s not likely to be a number evenly divided by 10).

Then we need to actually write the files, so:

- From zero up to the total number of rows of 10, create a file that contains the title row and then 10 rows of data from the CSV.

- Write the remaining rows into a final file prepending the file with the title row.

You can name the files whatever you like, but I tend to be partial to suffixing the file with _n where nn is equal to the number of files that will ultimately be written out.

So an example file may be acme_csv_11.csv.

Here’s a non-production ready PHP-based example. Again, this is just for demonstration purposes. I wouldn’t recommend copy, pasting, or even using this exactly as written.

Having small, multiple files improves a number of things:

- It makes it far less likely for PHP timeouts to happen because the script it executing on a smaller file

- It makes it easy to compare data from what’s in the file to what’s being imported

- It makes it possible to display a progress bar (via Ajax) to give the user feedback.

Not bad, right?

More To Come

Over the next few posts, I’ll be walking through the rest of the steps – from importing the files, displaying progress, and cleaning up after yourself, but this first post is simply to build a case as for why dividing up large files matters, and how it can mitigate timeouts.

Leave a Reply

You must be logged in to post a comment.