For the last few months, I’ve been doing a lot of work with Google Cloud Functions (along with other tools such as Cloud Storage, PubSub, and Cloud Jobs).

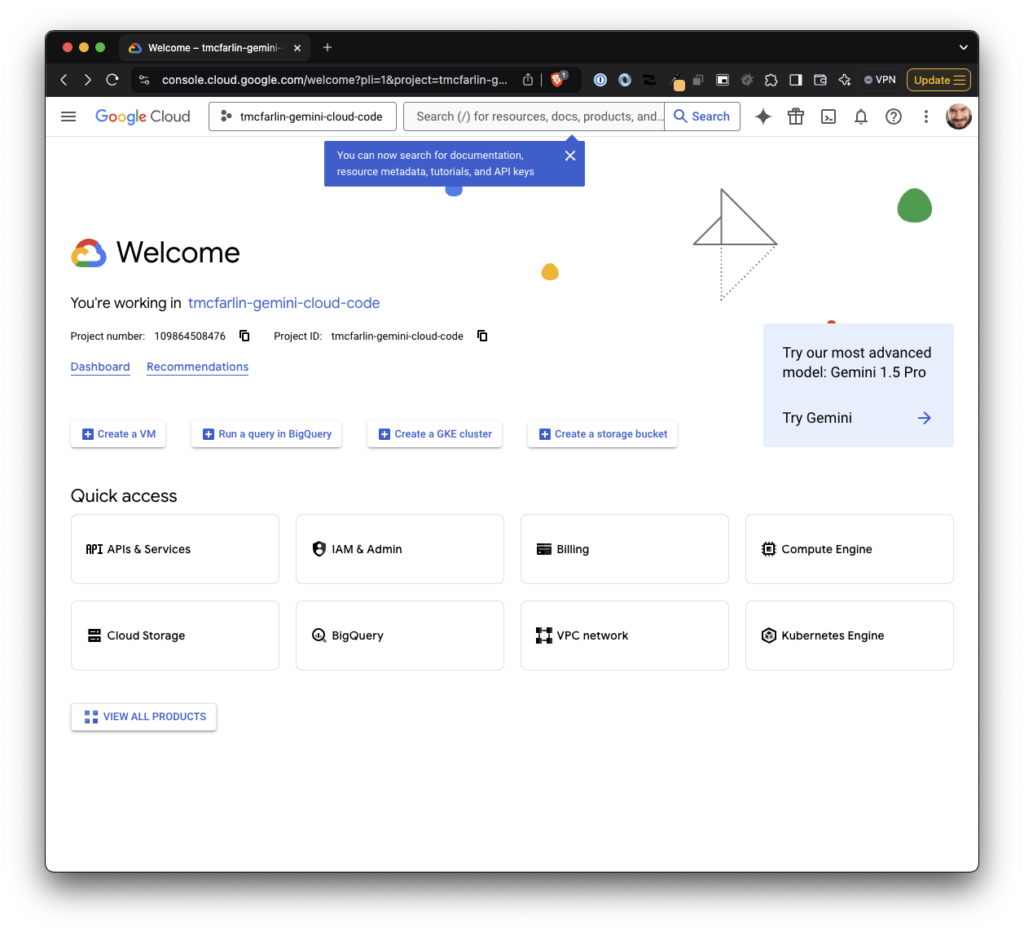

The ability to build systems on top of Google’s Cloud Platform (or GCP) is great. Though the service has a small learning curve in terms of getting familiar with how to use it, the UI looks exactly like what you’d expect from a team of developers responsible for creating such a product.

An Aside on UIs

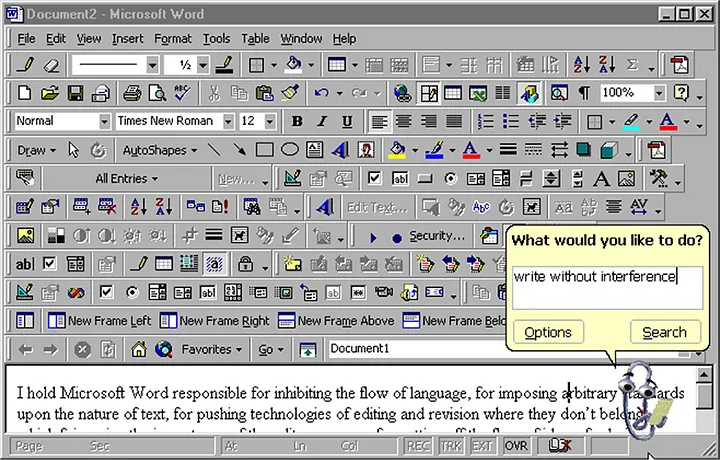

Remember how UIs used to look in the late 90s and early 00s? The joke was something like “How this application would look when designed by a programmer.”

UX Planet has a comic that captures this:

I can’t help but think of this whenever I am working in the Google Cloud Platform: Extremely powerful utilities with a UI that was clearly designed by the same types of people who would use it.

All that aside, the documentation is pretty good – using Gemini to work with it is better – and they offer a CLI which makes dealing with the various systems much easier.

That said, there are a few things I’ve found useful in every GCP-based project I’m involved in.

Specifically, if you’re working with Google’s Cloud Platform and are using PHP (I favor PHP 8.2 but to each their own, I guess), here are some things that I use in each project to make sure I can focus on solving the problem at hand without navigating too much overhead in setting up a project.

Locally Developing Google Cloud Functions

Prerequisites

- The gcloud CLI. This is the command-line tool provided by Google for interacting with Google Cloud Platform. The difference between this and the rest of the packages is that it’s a utility for connecting your system to Google’s infrastructure. The rest of the packages I’m listing are PHP libraries.

- vlucas/phpdotenv. I use this package to maintain a local copy of environment variables in a .env file. It works as a local substitute for anything I store in Google Secret Manager.

- google/cloud-functions-framework. This is the Google-maintained library for interacting with Cloud Functions. It’s what gives us the ability to run Google Cloud functions locally using the same code we deploy to our Google Cloud project.

- google/cloud-storage. Not every project will serialize data to Google Cloud Storage, but this package is what allows us to read and write data to Google Cloud Storage buckets. It allows us to write to buckets from our local machines just as if it were a cloud function.

- google/cloud-pubsub. This is the library I use to publish and subscribe to messages when writing to Google’s messaging system. It’s ideal for queuing up messages and then processing them asynchronously.
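To make the roles of these packages a little more concrete, here’s a minimal sketch of how they might fit together inside a single function. The helper names (`loadLocalEnv`, `setting`, `exportObjectName`, `storeAndAnnounce`) and the environment variable names (`GCP_PROJECT_ID`, `EXPORT_BUCKET`, `EXPORT_TOPIC`) are hypothetical, and it assumes Composer’s autoloader is already loaded and that the client libraries can find credentials on your machine.

```php
<?php

use Dotenv\Dotenv;
use Google\Cloud\PubSub\PubSubClient;
use Google\Cloud\Storage\StorageClient;

/**
 * Loads a local .env file when one exists. In the deployed function there
 * is no .env file, so this quietly does nothing there.
 */
function loadLocalEnv(string $directory): void
{
    if (is_file($directory . '/.env')) {
        Dotenv::createImmutable($directory)->safeLoad();
    }
}

/**
 * Reads a setting from $_ENV (populated by phpdotenv) or from the real
 * process environment, falling back to a default when it is missing.
 */
function setting(string $key, string $default = ''): string
{
    $value = $_ENV[$key] ?? getenv($key);

    return ($value === false || $value === '') ? $default : (string) $value;
}

/**
 * Builds the object name used inside the bucket (hypothetical layout).
 */
function exportObjectName(string $site, DateTimeInterface $when): string
{
    return sprintf('exports/%s/%s.json', $site, $when->format('Y-m-d'));
}

/**
 * Writes the payload to a Cloud Storage bucket, then publishes a Pub/Sub
 * message so another function can process it asynchronously.
 */
function storeAndAnnounce(string $objectName, array $payload): void
{
    $config = ['projectId' => setting('GCP_PROJECT_ID')];
    $json   = json_encode($payload, JSON_THROW_ON_ERROR);

    $bucket = (new StorageClient($config))->bucket(setting('EXPORT_BUCKET'));
    $bucket->upload($json, ['name' => $objectName]);

    $topic = (new PubSubClient($config))->topic(setting('EXPORT_TOPIC'));
    $topic->publish([
        'data'       => $objectName,
        'attributes' => ['contentType' => 'application/json'],
    ]);
}
```

Calling `loadLocalEnv(__DIR__)` once at the top of the function’s entry file would be enough for local runs. Reading settings through a single helper keeps the rest of the code unaware of whether a value came from .env or from the deployed environment.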

Organization

Though we’re free to organize code however we like, I’ve developed enough GCP-based solutions that I have a specific way I like to organize my project directories so there’s parity between the code and what my team and I see whenever we log in to GCP.

It’s simple: The top level directory is named the same as the Google Cloud Project. Each subdirectory represents a single Google Cloud Function.

So if I have a cloud project called tm-cloud-functions and there are three functions contained in the project, then the structure may look something like this:

    tm-cloud-functions/
    ├── process-user-info/
    ├── verify-certificate-expiration/
    └── export-site-data/

This makes it easy to know which project I’m working in, and it makes it easy to work directly on a single Cloud Function by navigating to that subdirectory.

Further, those subdirectories are self-contained: each maintains its own composer.json configuration, vendor directory, .env file for local environment variables, and other function-specific dependencies, files, and code.

So the final structure of the directory looks something like this:

    tm-cloud-functions/
    ├── process-user-info/
    │   ├── src/
    │   ├── vendor/
    │   ├── index.php
    │   ├── composer.json
    │   ├── composer.lock
    │   ├── .env
    │   └── ...
    ├── verify-certificate-expiration/
    │   ├── src/
    │   ├── vendor/
    │   ├── index.php
    │   ├── composer.json
    │   ├── composer.lock
    │   ├── .env
    │   └── ...
    └── export-site-data/
        ├── src/
        ├── vendor/
        ├── index.php
        ├── composer.json
        ├── composer.lock
        ├── .env
        └── ...

Testing

Assuming the system has been authenticated with Google via the CLI application, testing the function is easy.

First, make sure you’re authenticated with the same Google account that has access to GCP:

    $ gcloud auth login

Then set the project ID to match the one in the GCP project:

    $ gcloud config set project [PROJECT-ID]

Once done, verify the following is part of the composer.json file:

    "scripts": {
        "functions": "FUNCTION_TARGET=[main-function] php vendor/google/cloud-functions-framework/router.php",
        "deploy": [
            "..."
        ]
    },

Specifically, in the scripts section of the composer.json file, add the functions command that invokes the Google Cloud Functions library. This, in turn, lets you run your local code as if you were writing it in the Google Cloud UI. And if there are errors, notices, warnings, etc., they’ll appear in the console.

To run your function locally, run the following command:

    $ composer functions

Further, if you’ve got Xdebug installed, you can even step through your code. (And if you’re using Herd and Visual Studio Code, I’ve a guide for that.)

Deployment

Next, in composer.json, add the following line to the deploy section referenced above:

    "deploy": [
        "gcloud functions deploy [function-name] --project=[project-id] --region=us-central1 --runtime=php82 --trigger-http --source=. --entry-point=[main-function]"
    ]

Make sure the following values are set:

- function-name is the name of the Google Cloud Function set up in the GCP UI.

- project-id is the same ID referenced earlier in the article.

- main-function is whatever the entry point is for your Google Cloud Function. Oftentimes, Google’s boilerplate generates helloHttp or something similar. I prefer to use main.
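For reference, here’s a minimal sketch of what an index.php with a main entry point might look like for an HTTP-triggered function. The `buildGreeting` helper and the `name` query parameter are purely illustrative, and the sketch assumes the Functions Framework locates the function through `FUNCTION_TARGET` (locally) or `--entry-point` (on deploy). Google’s newer samples also register the function explicitly with `FunctionsFramework::http()`; that call is left out here to keep the example free of dependencies.

```php
<?php

use Psr\Http\Message\ServerRequestInterface;

/**
 * Builds the response body from the request's query parameters.
 * Kept separate from main() so it can be exercised without an HTTP request.
 */
function buildGreeting(array $query): string
{
    $raw  = $query['name'] ?? '';
    $name = is_string($raw) ? trim($raw) : '';

    // Escape user input before echoing it back in the response.
    return sprintf('Hello, %s!', $name === '' ? 'World' : htmlspecialchars($name));
}

/**
 * HTTP entry point. The name must match the value used for
 * FUNCTION_TARGET and --entry-point in composer.json.
 */
function main(ServerRequestInterface $request): string
{
    return buildGreeting($request->getQueryParams());
}
```

Keeping the entry point thin and pushing the real work into plain functions (or classes under src/) makes the logic easier to test and step through locally.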

Then, when you’ve tested your function and are ready to deploy it to GCP, you can run the following command:

    $ composer deploy

This will take your code and all necessary assets, bundle them, and send them to GCP. The function can then be accessed based on however you’ve configured it (for example, using authenticated HTTP access).

Note: Much like .gitignore, if you’re looking to deploy code to Google Cloud Functions and want to prevent deploying certain files, you can use a .gcloudignore file.

Conclusion

Ultimately, there’s still provisioning that’s required on the web to set up certain aspects of a project. But once the general infrastructure is in place, it’s easy to start running everything locally from testing to deployment.

And it’s not limited to functions: the same approach applies to working with Google Cloud Storage, PubSub, Secret Manager, and other features.

Finally, props to Christoff and Ivelina for providing some guidance on setting up some of this.