TL;DR: I’m what you’d call a social networking skeptic and I have little trust in the services we use to house our data.

I’ve been thinking about writing a post like this for some time. It’s not controversial and it won’t result in a lot of discussion, but it’s something important to me and it took a while to articulate everything I wanted to say.

Originally, it started off as something like this:

When it comes to working with data and applications, it’s important I have the ability to own my data.

But that isn’t completely true, so hear me out.

I know some people believe that open source gives them the ability to own all of the information that they give to the application, but that’s not always the case.

First, I think using nothing but open source as a personal philosophy is great. It’s not something that I personally choose to do, but I absolutely understand it and it does have a certain allure to it.

Secondly, working with open source software doesn’t necessarily mean that the information you’re giving an application will continue to be owned solely by you. Take, for example, Instagram.

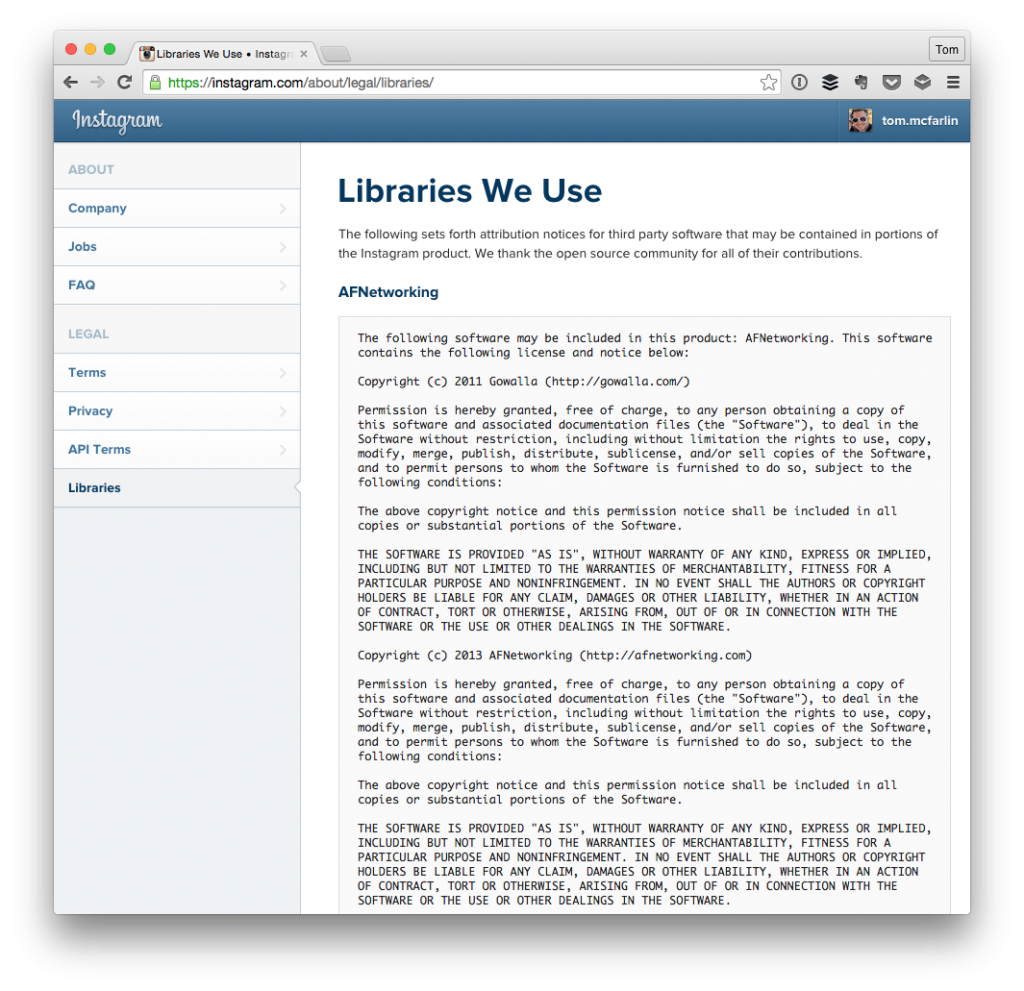

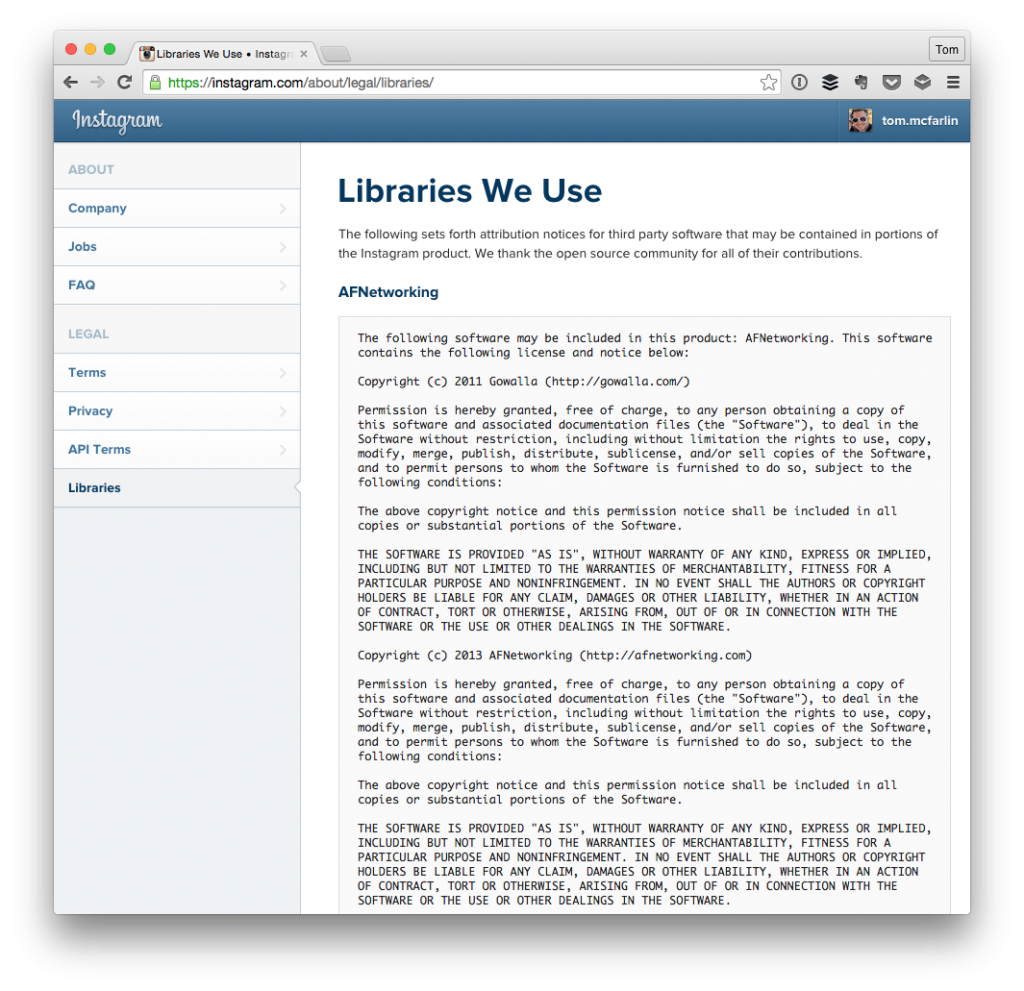

It’s a closed source application that uses third-party libraries, several of which are open source:

“We thank the open source community for all of their contributions.”

And that’s also fine. Using other libraries rather than writing your own – under the right circumstances – is one mark of good software development.

But do they let you maintain ownership of your images and videos? This isn’t meant to call out Instagram specifically; the question applies to any of the social sharing services that are currently available (especially the popular ones).

To be clear, applications like Instagram do let you keep a copy of your image on your Camera Roll (or whatever the equivalent is called on your phone or device), but once it’s shared, that image is also stored on a third-party server, where it may or may not persist whether or not you delete your account.

And sure, the terms to which you agree – whether you’ve read them or not – are subject to change at any time such that perhaps the rights you have today are not the rights you have tomorrow.

Again, for what it’s worth, I use Instagram as an example not because I’m out to vilify them, but because they were the first example that came to mind.

Anyway, the more I began to think about data ownership as it relates to social applications, the cloud, and basically any other application, the more I began to realize that my problem isn’t that I want to own all of my data – I mean, of course I want to own all of my data – but that I’m skeptical as to what some services may do with the data that I give them.

In this case, I’m specifically talking about social networking services.
